Ever wondered how to make a computer yawn? Well, that’s exactly what my award-winning thesis at Iberspeech set out to explore! But let’s rewind a bit, shall we?

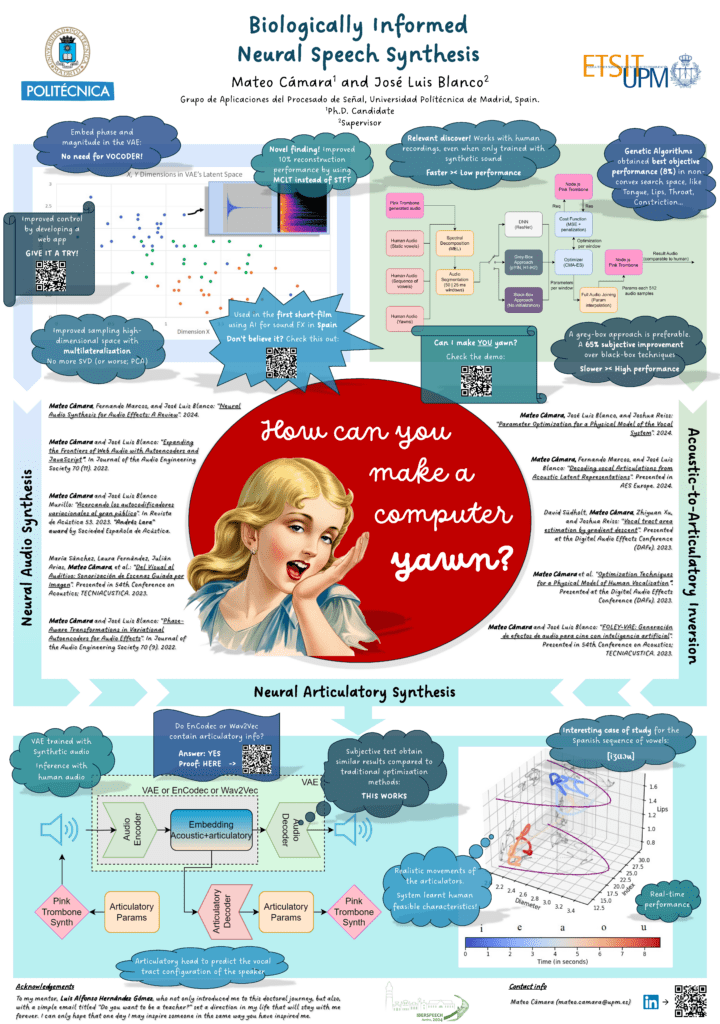

Looking at the top left of my poster, you’ll find where it all began – challenging the status quo in neural audio synthesis. You see, everyone was using vocoders for phase reconstruction in spectral-based VAEs. But I thought, “Why follow the crowd?” So, I developed my own phase embedding system. And why stick to STFT when we could explore other transforms? (Spoiler alert: the results were pretty amazing!)

Here’s a fun one: while others were using PCA to reduce latent space, I asked myself, “What if we did the opposite?” Taking inspiration from satellite geolocation, we expanded dimensions based on known parameters (an idea also awarded with the best paper at a conference). All this led to a web application where you could play with these concepts. And guess what? It contributed to the first Spanish short film ever to use AI-generated sound effects in its production. Don’t believe me? Check out the radio interview!

But the story takes an interesting turn in the top right corner. Here’s where I dove into acoustic-to-articulatory inversion (during my international visit in Queen Mary University of London) – basically, figuring out how the human vocal tract moves without poking around inside it. Imagine using a computerized model like Pink Trombone to mimic human sounds, like a yawn. Through analysis-by-synthesis (comparing human sounds with synthetic ones), we got some fantastic results using genetic algorithms. The only catch? It was slooow. A 10-second yawn took 10 minutes to synthesize!

Now, here’s where it gets exciting. I had knowledge of neural synthesis AND vocal tract biology—see where this is going? The bottom of the poster shows what I call articulatory neural synthesis. It’s beautifully simple: We use generative AI to create both audio and vocal tract configurations. The result? A shared embedding space that’s acoustically consistent and biologically informed. We even found biological traces in pre-trained models like Wav2Vec and EnCodec!

Does it work? You bet! Not only did we achieve real-time synthesis, but we also proved it wasn’t just making things up. Watch the i-e-a-o-u synthesis on the right – see how the tongue moves exactly where it should and the lips close at just the right moments.

So next time Rosita (the girl in the center of the poster) asks you how to make a computer yawn… well, now you know!

This journey led to my thesis being awarded Best Thesis at Iberspeech – an honor made even more special as I shared the stage with Luis Hernández, who received a lifetime achievement award. This success belongs to everyone: to Luis, to my supervisor José Luis, to my parents and brother, to my lab mates, and to my friends. A billion thanks to all of you!